In the last post, Xamarin Sound Effects, we talked about adding sound files. There we spent a lot of time on FLAC as a format that offers quick response times and great quality at a reasonable file size.

In this post we will talk about WAVE files. Why? Because we are going to generate and display our very own waveforms!

Generated Sounds in Xamarin:

WAVE files are the easiest format to work with because the audio is typically just uncompressed samples (an array of bytes, shorts or floats) preceded by a few header items. Generate the samples, add the header and you are good to go.
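To make "a few header items" concrete, here is a minimal sketch that wraps 16-bit mono PCM samples in the canonical 44-byte RIFF/WAVE header inside a `MemoryStream`. It assumes a single `fmt ` chunk and a single `data` chunk; `WavWriter` and `CreateWavStream` are hypothetical names for illustration, not part of any library used in this post.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical helper: wraps 16-bit mono PCM samples in a canonical WAVE header.
public static class WavWriter
{
    public static MemoryStream CreateWavStream(short[] samples, int sampleRate)
    {
        const short channels = 1;        // mono
        const short bitsPerSample = 16;  // one short per sample
        short blockAlign = (short)(channels * bitsPerSample / 8);
        int byteRate = sampleRate * blockAlign;
        int dataSize = samples.Length * blockAlign;

        var stream = new MemoryStream(44 + dataSize);
        // leaveOpen: true so disposing the writer does not close the stream we return
        using (var writer = new BinaryWriter(stream, Encoding.ASCII, leaveOpen: true))
        {
            // RIFF chunk descriptor
            writer.Write(Encoding.ASCII.GetBytes("RIFF"));
            writer.Write(36 + dataSize);           // bytes remaining after this field
            writer.Write(Encoding.ASCII.GetBytes("WAVE"));

            // "fmt " sub-chunk: describes the sample format
            writer.Write(Encoding.ASCII.GetBytes("fmt "));
            writer.Write(16);                      // sub-chunk size for PCM
            writer.Write((short)1);                // audio format 1 = uncompressed PCM
            writer.Write(channels);
            writer.Write(sampleRate);
            writer.Write(byteRate);
            writer.Write(blockAlign);
            writer.Write(bitsPerSample);

            // "data" sub-chunk: the raw samples (BinaryWriter is always little-endian)
            writer.Write(Encoding.ASCII.GetBytes("data"));
            writer.Write(dataSize);
            foreach (var sample in samples)
            {
                writer.Write(sample);
            }
        }

        // Rewind so a player starts reading at the header
        stream.Position = 0;
        return stream;
    }
}
```

With a generated `short[]` in hand, something like `WavWriter.CreateWavStream(samples, 44100)` gives you a stream of the kind Simple Audio Player's `Load` accepts.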

Here is how to generate and play waveforms in Xamarin.Forms.

Import NuGet packages

Import the NuGet package Simple Audio Player, as we did in part one, to actually play the data we generate. This library is nice because it accepts a Stream, which means we can construct a WAVE file in memory and feed it straight to the player!

Import the NuGet package for JaybirdLabs.Chirp, or grab the code from GitHub. The package was just created by Jaybird Labs, so there won't be many (if any) users yet. It gives us premade generators for Sine, Triangle, Saw, Square and White Noise waveforms, and you can also override it to create your own. More info can be found in the following blog post: Generating Wave Forms
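To get a feel for what those premade generators compute, here is a rough sketch of the textbook formula for each shape as a function of phase. This is not Chirp's implementation; the `Waveshape` enum and `Oscillator.SampleAt` method are hypothetical, and each case returns a value between -1.0 and 1.0 that you would then scale by `short.MaxValue`.

```csharp
using System;

// Hypothetical shape list mirroring the generators described above
public enum Waveshape { Sine, Triangle, Saw, Square, WhiteNoise }

public static class Oscillator
{
    static readonly Random Noise = new Random();

    // Returns a sample in [-1.0, 1.0] for sample index n
    public static double SampleAt(Waveshape shape, double frequency, int sampleRate, long n)
    {
        // Fraction of the current cycle, in [0, 1)
        double phase = (frequency * n / sampleRate) % 1.0;

        switch (shape)
        {
            case Waveshape.Sine:
                return Math.Sin(2 * Math.PI * phase);
            case Waveshape.Triangle:
                // Rises from -1 to 1 over the first half-cycle, falls back over the second
                return 1.0 - 4.0 * Math.Abs(phase - 0.5);
            case Waveshape.Saw:
                // Ramps linearly from -1 up towards 1 once per cycle
                return 2.0 * phase - 1.0;
            case Waveshape.Square:
                // High for the first half-cycle, low for the second
                return phase < 0.5 ? 1.0 : -1.0;
            case Waveshape.WhiteNoise:
                // Uniform random value; frequency plays no part
                return Noise.NextDouble() * 2.0 - 1.0;
            default:
                throw new ArgumentOutOfRangeException(nameof(shape));
        }
    }
}
```

Everything except noise is periodic in the phase, which is why one `phase` value drives all four shapes.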

Import the NuGet package for SkiaSharp.Waveform, or grab the code from GitHub. This lets us display the waveform we created by rendering it to a SkiaSharp canvas.

Set up the Simple Audio Player

This was covered in part one, so check that out to see how to set it up in your projects. From there it's easy to extend it to play your own waveforms.

Jaybird Chirp (Generating the sound)

This part is easy. Since Simple Audio Player can take a Stream, we just need to create a WAVE file in memory, and the Chirp library takes care of the difficult part. To get started, use one of the built-in signal types, as below, to generate a 5-second sine wave at 400 Hz:

```csharp
// Field (not a local) so the samples are still around when we draw them later
short[] amplitudes;

Stream GenerateAudioStream()
{
    // Generate the stream in memory and return the amplitude array
    var streamResult = new StreamGenerator().GenerateStream(SignalGeneratorType.Sin, 400, 5);

    // Save the amplitudes without the header information so we can display them to the user
    amplitudes = streamResult.Amplitudes;

    // Return the stream that will be played by Simple Audio Player
    return streamResult.WaveStream;
}
```

From here, you simply feed the Stream to Simple Audio Player:

```csharp
void PlaySound()
{
    // Create the cross-platform player
    var soundPlayer = CrossSimpleAudioPlayer.CreateSimpleAudioPlayer();

    // Call the generation method above
    var stream = GenerateAudioStream();

    // Load the sound and have it ready to play
    soundPlayer.Load(stream);

    // Let it rip!
    soundPlayer.Play();
}
```

To make your own sounds, inherit from SignalGenerator and override the Read(short[] buffer, int count) method. From here you are simply filling that array with shorts. Here is an example to make a Sine wave:

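```csharp
public override int Read(short[] buffer, int count)
{
    // Peak amplitude: scale the full 16-bit range by Gain (0.0 to 1.0).
    // Multiply before casting; casting Gain to short first truncates it to 0.
    var amplitude = short.MaxValue * Gain;

    // Radians advanced per sample: 2 * PI * frequency / sample rate
    var radiansPerSample = 2 * Math.PI * Frequency / WaveFormat.SampleRate;

    // Fill every slot in the buffer (index < count, not count - 1).
    // Note: the wave starts from phase 0 on every call.
    for (var index = 0; index < count; index++)
    {
        buffer[index] = (short)(amplitude * Math.Sin(radiansPerSample * index));
    }

    // Report how many samples were written
    return count;
}
```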
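The `Read` example above starts from phase zero on every call. That is fine when the whole clip is generated in one call, but if audio is requested in several chunks, each chunk restarts the wave and you get an audible click at every seam. Below is a minimal sketch of carrying the position across calls; `ContinuousSine` is a hypothetical standalone class, not Chirp's `SignalGenerator`, so frequency, sample rate and gain are passed in explicitly.

```csharp
using System;

// Hypothetical standalone sine source that keeps its phase between Read calls
public class ContinuousSine
{
    readonly double _radiansPerSample;
    readonly double _amplitude;
    long _position; // total samples produced so far

    public ContinuousSine(double frequency, int sampleRate, double gain)
    {
        _radiansPerSample = 2 * Math.PI * frequency / sampleRate;
        _amplitude = short.MaxValue * gain;
    }

    public int Read(short[] buffer, int count)
    {
        for (var i = 0; i < count; i++)
        {
            // Use the running position, not the buffer index, so chunks line up
            buffer[i] = (short)(_amplitude * Math.Sin(_radiansPerSample * _position));
            _position++;
        }
        return count;
    }
}
```

Reading 4 samples twice produces exactly the same data as reading 8 samples once, which is the property that keeps chunk boundaries inaudible.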

That is it! You made your own sound.

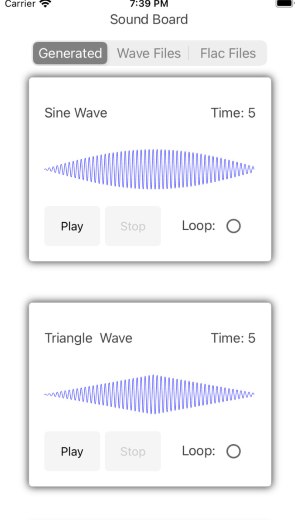

SkiaSharp.Waveform (Seeing the sound)

Using the work from Tom Alabaster, it is trivial to visualize the audio we just generated. We simply take the streamResult.Amplitudes from above, trim the count down to something that fits on the screen, convert them to normalized floats and pass those values into the Waveform library.
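One way to "trim the count down" is to split the samples into as many buckets as you want bars on screen and keep the loudest sample in each bucket. This is a sketch under that assumption; `WaveformMath.DownsampleToPeaks` is a hypothetical helper, and averaging or RMS per bucket would work too if you prefer a smoother look.

```csharp
using System;

public static class WaveformMath
{
    // Hypothetical helper: reduce samples to bucketCount values,
    // keeping the signed sample with the largest magnitude in each bucket
    public static short[] DownsampleToPeaks(short[] samples, int bucketCount)
    {
        if (samples is null) throw new ArgumentNullException(nameof(samples));
        if (bucketCount <= 0) throw new ArgumentOutOfRangeException(nameof(bucketCount));

        // Never produce more buckets than there are samples
        bucketCount = Math.Min(bucketCount, samples.Length);
        var peaks = new short[bucketCount];

        for (var b = 0; b < bucketCount; b++)
        {
            // Integer bucket boundaries spread any remainder evenly
            var start = (int)((long)b * samples.Length / bucketCount);
            var end = (int)((long)(b + 1) * samples.Length / bucketCount);

            var peak = 0;
            for (var i = start; i < end; i++)
            {
                // Compare magnitudes as int so short.MinValue cannot overflow
                if (Math.Abs((int)samples[i]) > Math.Abs(peak)) peak = samples[i];
            }
            peaks[b] = (short)peak;
        }
        return peaks;
    }
}
```

Keeping the sign means the result can go straight through the same normalization step as the full array.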

- Add `SKCanvasView` to your XAML, and make sure to subscribe to the `PaintSurface` event handler:

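```xml
<!-- Assumes xmlns:skia="clr-namespace:SkiaSharp.Views.Forms;assembly=SkiaSharp.Views.Forms" on the page root -->
<skia:SKCanvasView x:Name="WaveformCanvas"
                   HeightRequest="80"
                   HorizontalOptions="FillAndExpand"
                   VerticalOptions="FillAndExpand"
                   PaintSurface="PaintWaveFormCanvas" />
```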

- Convert the `short[]` to a normalized `float[]` ranging from -1.0 to 1.0:

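```csharp
using System;

// Extension methods must live in a top-level static class (the class name is arbitrary)
public static class AmplitudeExtensions
{
    // Maps each 16-bit sample to roughly -1.0..1.0
    // (short.MinValue lands at about -1.00003 because the 16-bit range is asymmetric)
    public static float[] ToNormalizedFloat(this short[] data)
    {
        if (data is null)
        {
            throw new ArgumentNullException(nameof(data));
        }

        var result = new float[data.Length];
        for (var i = 0; i < data.Length; i++)
        {
            result[i] = (float) data[i] / short.MaxValue;
        }

        return result;
    }
}
```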

- In the view's code-behind, pass the normalized float array into the Waveform library to create the waveform. Next, signal to the canvas that it is ready to redraw by calling `InvalidateSurface`:

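```csharp
// Convert the saved samples and build the waveform (_waveForm is a field on the page)
var normalizedAmplitudes = amplitudes.ToNormalizedFloat();

_waveForm = new Waveform.Builder()
    .WithAmplitudes(normalizedAmplitudes)
    .WithSpacing(5f)
    .WithColor(new SKColor(0x00, 0x00, 0xff))
    .Build();

// Ask the canvas to raise PaintSurface again
WaveformCanvas.InvalidateSurface();
```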

- Draw on the Skia surface:

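```csharp
void PaintWaveFormCanvas(object sender, SKPaintSurfaceEventArgs e)
{
    var canvas = e.Surface.Canvas;

    // Clear the previous frame before drawing
    canvas.Clear();

    // PaintSurface can fire before any waveform has been generated
    _waveForm?.DrawOnCanvas(canvas);
}
```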
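If you are curious what drawing a waveform involves, or want to draw one without the library, the core is mapping each normalized amplitude to a vertical bar centred on the canvas. The sketch below only computes the geometry, so it has no SkiaSharp dependency; `BarLayout.Compute` and its parameters are hypothetical and are not SkiaSharp.Waveform's internals. Each rectangle could then be drawn with `SKCanvas.DrawRect` inside the `PaintSurface` handler.

```csharp
using System;

public static class BarLayout
{
    // Hypothetical layout: one bar per amplitude across the canvas width,
    // separated by `spacing` pixels and centred vertically
    public static (float Left, float Top, float Width, float Height)[] Compute(
        float[] amplitudes, float canvasWidth, float canvasHeight, float spacing)
    {
        if (amplitudes is null) throw new ArgumentNullException(nameof(amplitudes));
        var bars = new (float Left, float Top, float Width, float Height)[amplitudes.Length];
        if (amplitudes.Length == 0) return bars;

        // Width left for each bar after removing the gaps between bars
        var barWidth = Math.Max(0f, (canvasWidth - spacing * (amplitudes.Length - 1)) / amplitudes.Length);
        var centerY = canvasHeight / 2f;

        for (var i = 0; i < amplitudes.Length; i++)
        {
            // Magnitude only, so negative samples draw as tall as positive ones;
            // clamped because normalized short.MinValue is slightly below -1
            var magnitude = Math.Min(1f, Math.Abs(amplitudes[i]));
            var height = magnitude * canvasHeight;
            bars[i] = (i * (barWidth + spacing), centerY - height / 2f, barWidth, height);
        }
        return bars;
    }
}
```

For example, three amplitudes on a 100 x 80 canvas with 5-pixel spacing give 30-pixel-wide bars starting at x = 0, 35 and 70.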

That's it! There is a fully working sample on GitHub that has all of the pieces working together.

It really is not as difficult as you might think to start making your own audio in a cross-platform way. Now that you have all the tools, I am looking forward to seeing a mobile version of Ableton Live that works on all platforms!